Explain and exemplify UTF-8, UTF-16, and UTF-32 3 – Objects, Immutability, Switch Expressions, and Pattern Matching

By Adenike Adekola / May 15, 2022

Unicode

In a nutshell, Unicode (https://unicode-table.com/en/) is a universal encoding standard capable of encoding/decoding every possible character in the world (we are talking about hundreds of thousands of characters), which gives people the global compatibility they wanted. Representing all these characters needs more than one byte per character, but Unicode itself doesn’t get involved in this representation. It just assigns a number to each character. This number is called a code point. For instance, the letter A has the code point 65 in decimal, and we refer to it as U+0041: the constant prefix U+ followed by 65 written in hexadecimal (0x41). As you can see, in Unicode, A is 65, exactly as in the ASCII encoding. In other words, Unicode is backward compatible with ASCII. As you’ll see soon, this is a big deal, so keep it in mind!

Early versions of Unicode contain only characters with code points up to 65,535 (0xFFFF). Java represents these characters via the 16-bit char data type. For instance, the French ê (e with circumflex) has the code point 234 in decimal, or U+00EA in hexadecimal. In Java, we can use charAt() to reveal the code point of any Unicode character up to 65,535:

int e = "ê".charAt(0); // 234

String hexe = Integer.toHexString(e); // ea

We can also see the binary representation of this character:

String binarye = Integer.toBinaryString(e); // 11101010 = 234
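Putting these snippets together, here is a minimal runnable sketch that prints the decimal, U+ hexadecimal, and binary forms of A and ê. The class name CodePointBasics and the toUPlus() helper are hypothetical names chosen for illustration. Since it relies on charAt(), it is only valid for characters up to U+FFFF:

```java
public class CodePointBasics {

    // Formats a code point in the conventional U+XXXX notation (at least 4 hex digits)
    static String toUPlus(int codePoint) {
        return String.format("U+%04X", codePoint);
    }

    public static void main(String[] args) {
        // "A" followed by ê, written as a Unicode escape so the source file encoding does not matter
        String text = "A\u00EA";

        for (int i = 0; i < text.length(); i++) {
            int cp = text.charAt(i); // a char widens to its int value; valid for code points up to U+FFFF
            System.out.println(cp + " " + toUPlus(cp) + " " + Integer.toBinaryString(cp));
        }
        // prints:
        // 65 U+0041 1000001
        // 234 U+00EA 11101010
    }
}
```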

Later, Unicode added more and more characters, with code points up to 1,114,111 (0x10FFFF), for a code space of 1,114,112 code points in total. Obviously, the 16-bit Java char is not enough to represent these characters, and calling charAt() no longer reveals their code points.
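To see this limitation in practice, here is a minimal sketch using U+1F600 (grinning face), a character picked for illustration only because it lies above U+FFFF. The class name BeyondCharDemo and its fromCodePoint() helper are hypothetical; Character.toChars(), String.codePointAt(), Character.isSupplementaryCodePoint(), and Character.MAX_CODE_POINT are standard JDK APIs. Notice that charAt(0) returns only the first of two 16-bit units (a surrogate, covered in the next section), while codePointAt(0) returns the real code point:

```java
public class BeyondCharDemo {

    // Builds a String from a single code point (may produce one or two chars)
    static String fromCodePoint(int codePoint) {
        return new String(Character.toChars(codePoint));
    }

    public static void main(String[] args) {
        // U+1F600 is above U+FFFF, so it cannot fit in one 16-bit char
        int codePoint = 0x1F600;
        String emoji = fromCodePoint(codePoint);

        System.out.println(emoji.length());                                // 2 (two chars for one character)
        System.out.println(Integer.toHexString(emoji.charAt(0)));          // d83d (not the code point)
        System.out.println(Integer.toHexString(emoji.codePointAt(0)));     // 1f600
        System.out.println(Character.isSupplementaryCodePoint(codePoint)); // true
        System.out.println(Integer.toHexString(Character.MAX_CODE_POINT)); // 10ffff
    }
}
```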

Java 19+ supports Unicode 14.0: the java.lang.Character API supports level 14.0 of the Unicode Character Database (UCD). In numbers, this brings 47 new emoji, 838 new characters, and 5 new scripts.

However, Unicode doesn’t get involved in how these code points are encoded into bits. This is the job of dedicated encoding schemes defined within Unicode, such as the Unicode Transformation Format (UTF) schemes. The most commonly used are UTF-32, UTF-16, and UTF-8.
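As a quick preview of how these three schemes differ, the following sketch counts the bytes each one needs for A, ê, and U+1F600 (the last character is our own illustrative pick). The class name EncodingLengths and its byteCount() helper are hypothetical. We assume the big-endian variants: Java's plain UTF_16 charset writes a 2-byte byte-order mark when encoding, which would distort the counts, and UTF-32BE is looked up by name because it is not in StandardCharsets (it ships with OpenJDK but is not among the charsets the Java specification requires):

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

public class EncodingLengths {

    // UTF-32BE is not a StandardCharsets constant, so it is looked up by name
    static final Charset UTF_32BE = Charset.forName("UTF-32BE");

    // Number of bytes needed to encode the text in the given charset
    static int byteCount(String text, Charset charset) {
        return text.getBytes(charset).length;
    }

    public static void main(String[] args) {
        String[] samples = {"A", "\u00EA", new String(Character.toChars(0x1F600))};
        for (String s : samples) {
            System.out.printf("U+%04X: UTF-8=%d, UTF-16=%d, UTF-32=%d bytes%n",
                    s.codePointAt(0),
                    byteCount(s, StandardCharsets.UTF_8),
                    byteCount(s, StandardCharsets.UTF_16BE), // BE variant: no byte-order mark
                    byteCount(s, UTF_32BE));
        }
    }
}
```

Running it prints 1/2/4 bytes for A, 2/2/4 for ê, and 4/4/4 for U+1F600: only UTF-32 is fixed length.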

UTF-32

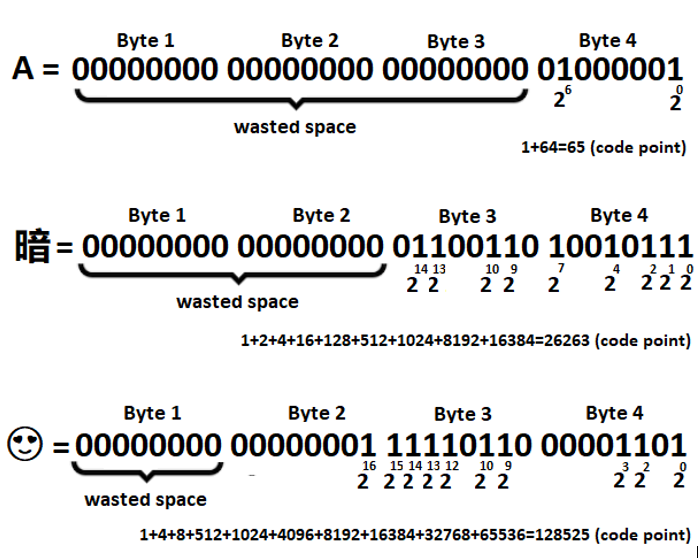

UTF-32 is an encoding scheme for Unicode that represent every code point on 4 bytes (32 bits). For instance, the letter A (having code point 65), which can be encoded on a 7-bit system, is encoded in UTF-32 as in the following figure next to the other two characters:

Figure 2.4 – Three characters sample encoded in UTF-32
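Because UTF-32 stores each code point value directly in 32 bits, encoding by hand is just splitting an int into 4 bytes. Here is a minimal sketch, assuming big-endian byte order (UTF-32 also has a little-endian variant); the class and method names are hypothetical, and the result is cross-checked against the JDK's UTF-32BE charset:

```java
import java.nio.charset.Charset;
import java.util.Arrays;

public class Utf32ByHand {

    // UTF-32 stores the code point value itself: 4 bytes, most significant first
    static byte[] encodeUtf32(int codePoint) {
        return new byte[] {
            (byte) (codePoint >>> 24),
            (byte) (codePoint >>> 16),
            (byte) (codePoint >>> 8),
            (byte) codePoint
        };
    }

    // Renders bytes as space-separated two-digit hex values
    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            if (sb.length() > 0) sb.append(' ');
            sb.append(String.format("%02X", b & 0xFF));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(hex(encodeUtf32('A')));     // 00 00 00 41 (3 bytes wasted)
        System.out.println(hex(encodeUtf32(0x1F600))); // 00 01 F6 00

        // Cross-check against the JDK's UTF-32BE charset
        byte[] jdk = "A".getBytes(Charset.forName("UTF-32BE"));
        System.out.println(Arrays.equals(jdk, encodeUtf32('A'))); // true
    }
}
```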

As you can see in Figure 2.4, UTF-32 uses a fixed length of 4 bytes to represent every character. In the case of the letter A, UTF-32 wastes 3 bytes of memory. This means that converting an ASCII file to UTF-32 makes it 4 times larger (for instance, a 1KB ASCII file becomes a 4KB UTF-32 file). Because of this shortcoming, UTF-32 is not very popular. Java doesn’t include UTF-32 among its standard charsets; instead, it relies on surrogate pairs (introduced in the next section).
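The 4x size penalty for ASCII text is easy to verify. This minimal sketch (hypothetical class and method names) encodes 1,024 ASCII characters both ways; UTF-32BE is again obtained via Charset.forName(), which works on OpenJDK even though UTF-32 is not one of the charsets every Java implementation must provide:

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

public class Utf32Overhead {

    static final Charset UTF_32BE = Charset.forName("UTF-32BE");

    // Ratio of UTF-32 size to ASCII size; meaningful only for non-empty, pure-ASCII text
    static double sizeRatio(String asciiText) {
        if (asciiText.isEmpty()) {
            throw new IllegalArgumentException("text must not be empty");
        }
        int asciiBytes = asciiText.getBytes(StandardCharsets.US_ASCII).length;
        int utf32Bytes = asciiText.getBytes(UTF_32BE).length;
        return (double) utf32Bytes / asciiBytes;
    }

    public static void main(String[] args) {
        String text = "A".repeat(1024); // 1KB of pure ASCII text (String.repeat needs Java 11+)
        System.out.println(text.getBytes(StandardCharsets.US_ASCII).length); // 1024
        System.out.println(text.getBytes(UTF_32BE).length);                  // 4096
        System.out.println(sizeRatio(text));                                 // 4.0
    }
}
```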